Tour Microsoft's Virtual Earth


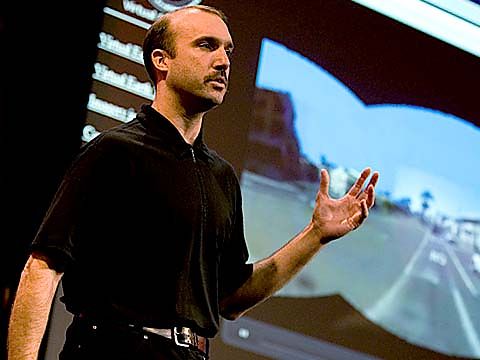

Stephen Lawler | TED2007 • March 2007

Microsoft's Stephen Lawler gives a whirlwind tour of Virtual Earth, moving up, down and through its hyper-real cityscapes with dazzling fluidity, a remarkable feat that requires staggering amounts of data to bring into focus.