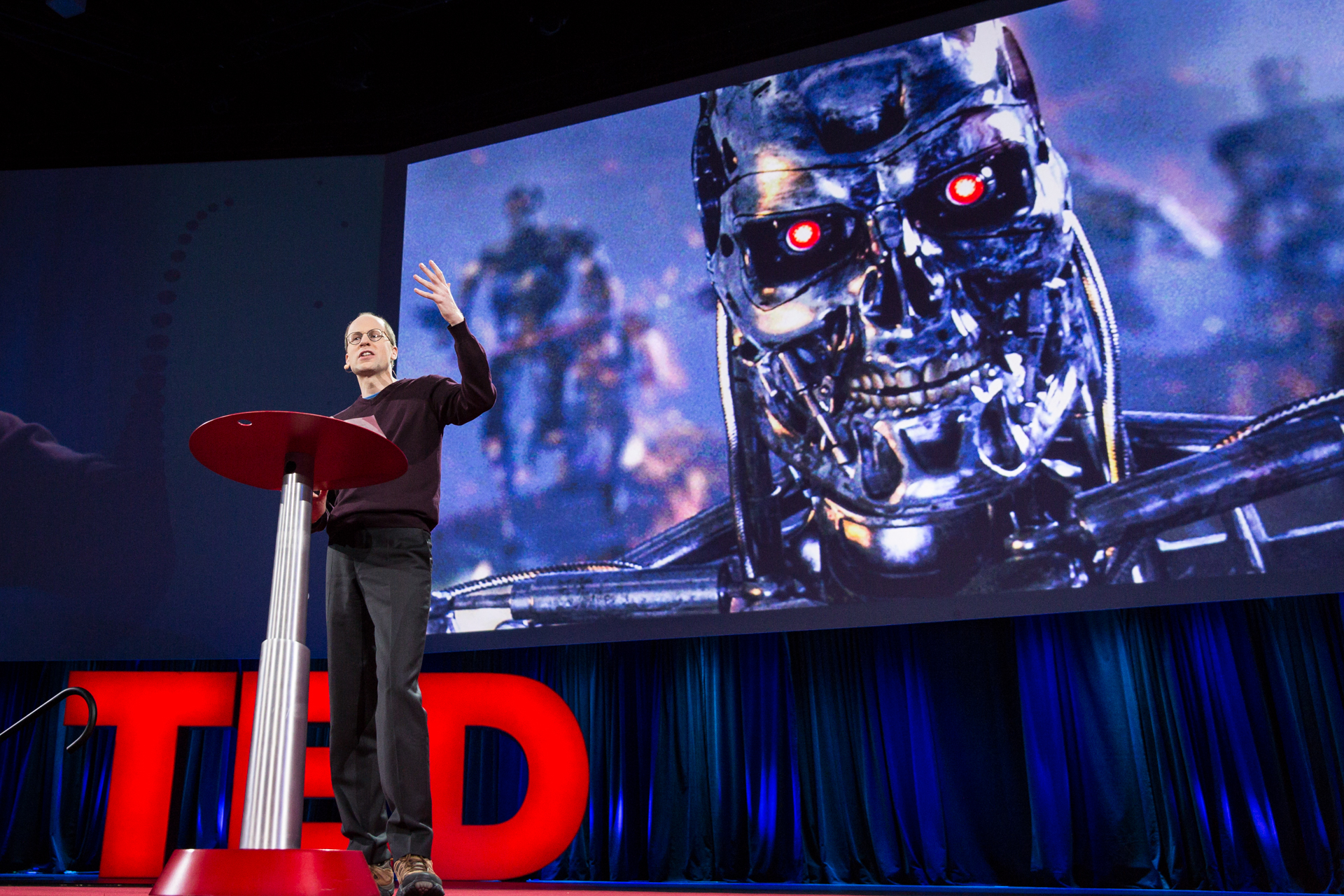

Nick Bostrom asks if (and when) our ever-smarter machines will start to outstrip human intelligence — and start using us instead of being used by us. Note: This is not the first Terminator reference made at TED2015. Photo: Bret Hartman/TED

Chris Anderson introduces Session 3 with a compelling idea: “Most of the new things happening right now that feel like magic — whether it’s self-driving cars or online translations suddenly getting much better — there’s machine learning behind it.” In this session we look at the issues around machines that learn.

The challenge of teaching a computer to see. How do you help a computer recognize a cat? It sounds easy: you program it to identify a creature with a round face, pointed ears, a furry body and a tail. But what about the cat curled up in a strange ball, or the cat barely peeking out from behind a door? “Even a simple household pet has a million variations,” says Fei-Fei Li. As director of Stanford’s Artificial Intelligence Lab and Vision Lab, she has spent fifteen years trying to teach computers to see, because, as she puts it, “to take pictures is not the same as seeing; seeing is really understanding.” She adds, “Vision begins in the eyes but truly takes place in the brain.” Li shares an insight that changed how she thought about the problem: no one teaches a child to see. Children learn by encountering real-world examples, and by age 3 they can understand complex images. This insight led Li to help create ImageNet, a database of 15 million images across 22,000 categories of objects. (It has, for example, 62,000 cat photos.) “We finally tied all this together and produced one of the first computer vision models that is capable of generating a human-like sentence when it sees a picture for the first time,” says Li. Impressive as this is, the technology still has far to go. “To get from age 0 to 3 is hard, but the real challenge is to get from 3 to 13 and well beyond.”

Mountains of moving dots. How do you quantify a world of movement? That’s the question that occupies computer scientist Rajiv Maheswaran and his team at USC, who have taught computers to model the movements of basketball players. Beyond basics like passes and shots, they have taught a computer to recognize more complex movements such as the pick and roll, a common play involving two offensive players and two defenders. By quantifying what is normally only qualitative, Maheswaran can teach a computer subtle, ill-defined movement; in other words, to see with the eyes of a coach. This has led to insights into what makes a shot good or bad, and to estimates of the probability that individual shots, and entire plays, will succeed. Take, for example, the famous 2013 NBA Finals game between the Miami Heat and the San Antonio Spurs, in which Miami was down by three points in the last twenty seconds and still won. Maheswaran calculates that this comeback, one of the most exciting moments in recent basketball history, had a 9 percent chance of happening. “You don’t have to be a professional player or coach to gain insights about movement,” Maheswaran says. Indeed, he hopes his work can someday be applied to all areas of life, fostering a greater appreciation for the science of moving dots.

Chris Urmson runs Google[x]’s self-driving car program, and makes the bold case that your next car should be driverless. Photo: Bret Hartman/TED

Why we need self-driving cars. “In 1885, Carl Benz invented the automobile,” says Chris Urmson, Director of Self-Driving Cars at Google[x]. “A year later, he took it out for a test drive and, true story, promptly crashed it into a wall.” Throughout the history of the car, he says, “we’ve been working around the least reliable part of the car: the driver.” Every year, 1.2 million people are killed on roads around the world. There are two approaches to using machines to help solve that problem: driver assistance systems, which make the driver better, and self-driving cars, which take over the task of driving. Urmson firmly believes that self-driving cars are the right approach. Using simulations that break a road down into a series of lines, boxes and dots, he shows us how Google’s driverless cars handle all kinds of situations, from a turning truck to a woman chasing ducks through the street. Every day, these systems go through 3 million miles of simulation testing. “The urgency is so large,” says Urmson. “We’re looking forward to having this technology on the road.”

The journey of a math nerd. We take a break from AI to sit down with mathematician and philanthropist Jim Simons. In a Q&A with TED’s curator, Chris Anderson, he takes us through his journey from NSA code breaker to immensely successful hedge fund manager to science education advocate. Along the way, he pauses on the dense theoretical research he did with Shiing-Shen Chern, which decades later surprised Simons by turning out to have implications for string theory and condensed matter physics. Simons’ mathematical mind helped him pioneer a new kind of modeling for the financial sector, and part of his success, he says, came from pursuing knowledge. “I didn’t really know how to hire people to do fundamental trading, but I did know how to hire scientists,” he says. He jokes, “A lot of them did come because of the money, but they also came because it would be fun.” But he adds: “I’m not too worried about Einstein going off and starting a hedge fund.” Since the ’90s, Simons has been giving back: he and his wife run the Simons Foundation, which supports research in basic science and mathematics.

In 98% of hack events, the hacker is located in a different country from the person who is hacked. Evan Tann and his team at Cloudwear discovered this by analyzing 10,000 hacks, and they think it could be key to combating cybercrime. Their Verified Location Technology “blocks and tracks hackers as they attempt to access data online.” Rather than relying on IP addresses or other signals that can be spoofed, it reads data from sources within 1,500 feet of the hacker, sources “outside of the hacker’s control.” This technology is being used by law enforcement and will soon be available to the rest of us.

The future of machine intelligence. Humans tend to think of the modern human condition as normal, says philosopher Nick Bostrom. But the reality is that human beings arrived on the planetary scene only recently, and from an evolutionary perspective, there’s no guarantee that we’ll continue to reign over the planet. One big question worth asking: when artificial intelligence advances beyond human intelligence (and Bostrom suggests it will happen faster than we think), how can we ensure that it advances human knowledge instead of wiping out humanity? “We should not be confident in our ability to keep a superintelligent genie locked up in its bottle,” he says. In any event, the time to think about instilling human values in artificial intelligence is now, not later. As for evolution itself? “The train doesn’t stop at Humanville Station. It’s more likely to swoosh right by.”

Let’s not demonize AI. Oren Etzioni of the Allen Institute for Artificial Intelligence has worked with artificial intelligence for 20 years and takes issue with those who describe his work as “summoning the demon.” There is a big difference between intelligence and autonomy, he says: developing one does not bring us to the other. He paraphrases Andrew Ng: halting AI research to keep computers from turning evil is like stopping the space program to combat overpopulation on Mars. Etzioni concludes, “Let’s not worry about far-fetched, fantastical scenarios. The bottom line is this: AI won’t exterminate us. It will empower us to tackle real problems and help humanity.”

You can watch this session, uncut and as it happened, via TED Live’s on-demand conference archive. A fee is charged to help defray our storage and streaming costs. Sessions start at $25.
